- July 18, 2023

- by Sridhar Rajagopalan, Co-founder & Chief Learning Officer, Ei


Key Principles for Creating High-Quality Assessments

Student assessments are a key part of the education system. The data and insights they generate provide valuable feedback on the performance of students, teachers, schools, and the education system in general. Additionally, because stakeholders in the system place great emphasis on good performance in examinations, improving assessments can have positive upstream effects across the system. ‘Teaching to the test’ can promote learning if the test is a good one.

Over the last two decades, Ei has created thousands of assessments at the school level. We have also studied the assessments used in our schools as well as the school-leaving exams. Based on our collective experience over this period, we share certain key principles that, if adhered to, can improve the quality of assessments (and consequently of student learning).

These principles are:

- Testing key concepts and core knowledge, not peripheral facts

- Using questions that are unfamiliar either in the way they are framed and/or their context

- Having questions covering the entire range of difficulty in a paper

- Ensuring that difficult questions are based on ‘good’ sources of difficulty

- Using authentic data in questions

- Avoiding narrowly defining a large number of competencies and then only having questions testing individual competencies

- Using different question types to test different aspects of student competencies

- Providing test creators access to past student performance data, to use while designing questions

- Designing answer rubrics to capture errors and misconceptions

- Publishing post-examination analysis booklets

- In large-scale exams, reporting results using scaled scores and percentiles

Assessments are, and shall continue to remain, a ‘north star’ that guides the actors in the education system. They will therefore continue to have an outsized effect on the priorities of stakeholders. Adhering to these principles, we believe, will help create well-designed assessments that will not remain just a ‘necessary evil’, but positively influence the education system, and subsequently our workforce and society.

Principle 1. Testing key concepts and core knowledge, and not peripheral facts:

Examinations should primarily test a student’s understanding of key concepts. It is also okay if they test for certain facts, as long as those facts are core to the subject. For example, the concepts of compounds and mixtures and the difference between them represent a fundamental understanding about matter. The fact that the Earth’s axis is tilted from the perpendicular of the plane of revolution is a core fact which is okay to test. On the other hand, the amount by which the Earth’s polar circumference is less than its equatorial circumference is an unimportant one and should not be tested. Yet many of our exams test peripheral or trivial facts like these.

Trivial facts should be left out of tests, not just because they can easily be looked up on any mobile phone, but also because they may displace core understanding. One way to test if a certain question is a valid one to ask is to check if a high percentage (say 70%) of practicing experts of the subject will answer it correctly. Every physicist will know the difference between compounds and mixtures and all about the tilt of the Earth’s axis, but most geographers would probably NOT know that the polar circumference is 72 km less than the equatorial circumference. Questions that test reasoning and higher-order thinking are also more appropriate to real-world tasks and challenges today and hence more important.

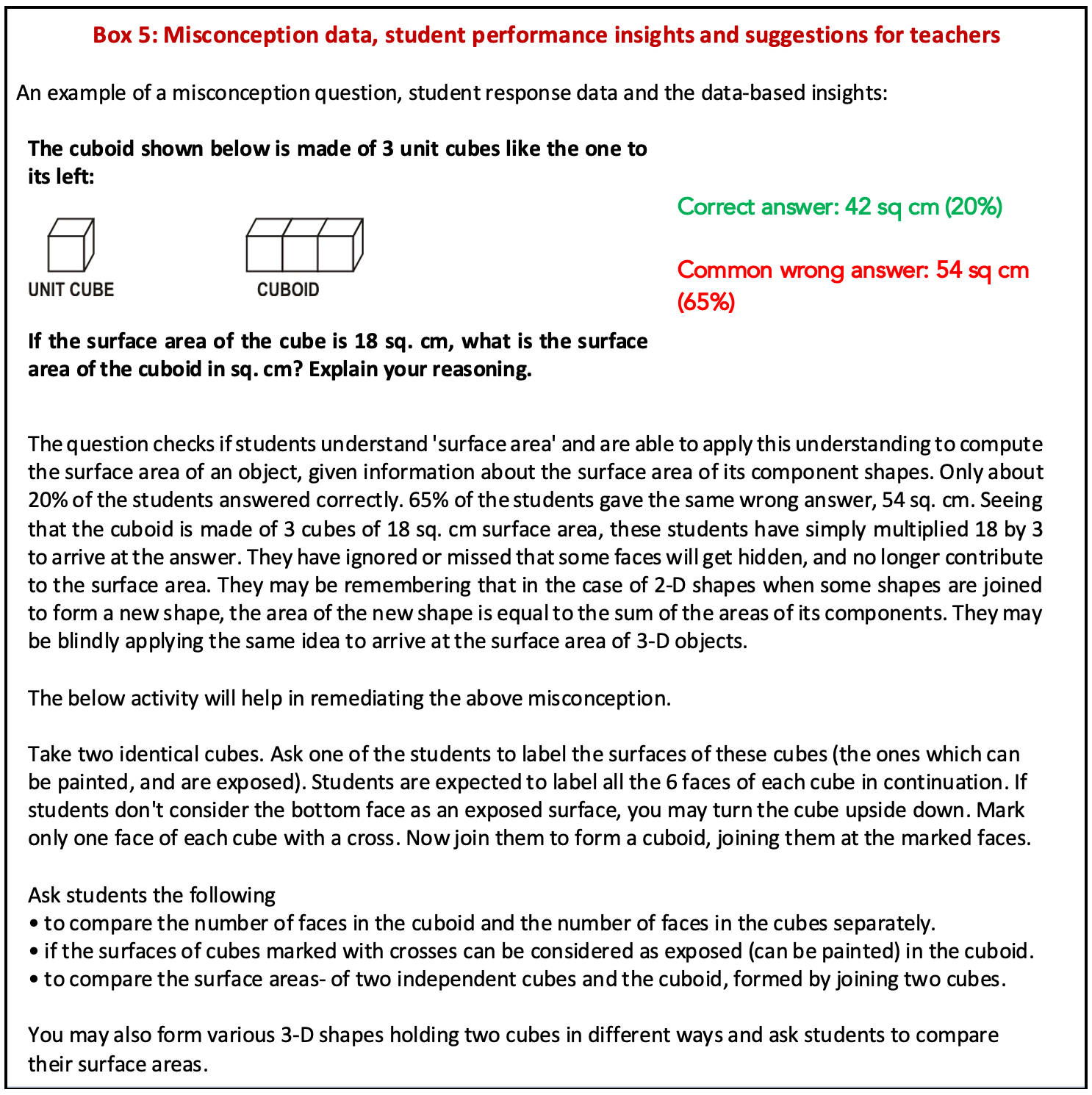

Figure 1 contrasts a question testing mechanical learning with one testing real learning with understanding.

Figure 1: Asking the definition of a peninsula tests for mechanical learning while the alternative shown expects students to understand the characteristics of peninsulas even if they cannot give the textbook definition.

To summarise, not everything that can be asked should be asked. Rather, assessments should focus on concepts and some facts that would serve as a foundation for real-life application or future learning.

Principle 2. Using questions that are unfamiliar either in the way they are framed and/or their context:

Most examinations in our country, both at the school and Board level, tend to have questions that are typical or fit a standard form. Questions rarely use unusual or unfamiliar contexts or forms. So students develop the techniques and confidence to answer those standard questions (often by reproducing the solutions in the textbooks). They learn that when they encounter a question in an exam, they should ‘pattern-match’ to check which question they have seen in the textbook or class most closely matches it, and apply the same procedure. Unfortunately, this actually works and yields the expected result with most questions, so the ‘learning’ is reinforced. Students gain no exposure to having to respond to problems that are presented differently or need to be tackled differently. The process of first trying to understand the problem, then thinking about it and then attempting to solve it step by step, is largely unknown to them.

Thus, this is nothing more than a form of rote learning, where procedures or patterns, if not facts, are memorised. When faced with unfamiliar problems, whether in modern tests like PISA, competitive tests or even unexpected real-life situations, students tend to feel flabbergasted or unprepared, or to conclude that the question is ‘out of syllabus’. Most students lack the confidence to even attempt such questions.

Whether we want to check whether students have really learned concepts, or we want to prepare them for future exams, tests should contain questions that test a prescribed set of concepts in an unfamiliar way.

What do we mean by ‘unfamiliar’ questions? Questions can be unfamiliar in different ways:

- they may be framed using real-life contexts (e.g. sports, technology, art, music, market transactions) which are not used for that concept in the textbook

- they may be framed in the context of contemporary developments (e.g. COVID-19, cryptocurrency, an important current event) which too would be ‘new’ for them

- they may integrate concepts taught in different subjects (e.g., show a graph recorded by a seismograph during an earthquake and ask a simple interpretation question in a mathematics test)

- they may simply test for conceptual understanding, misconceptions or higher-order cognitive skills in any form that has not been discussed in the textbook

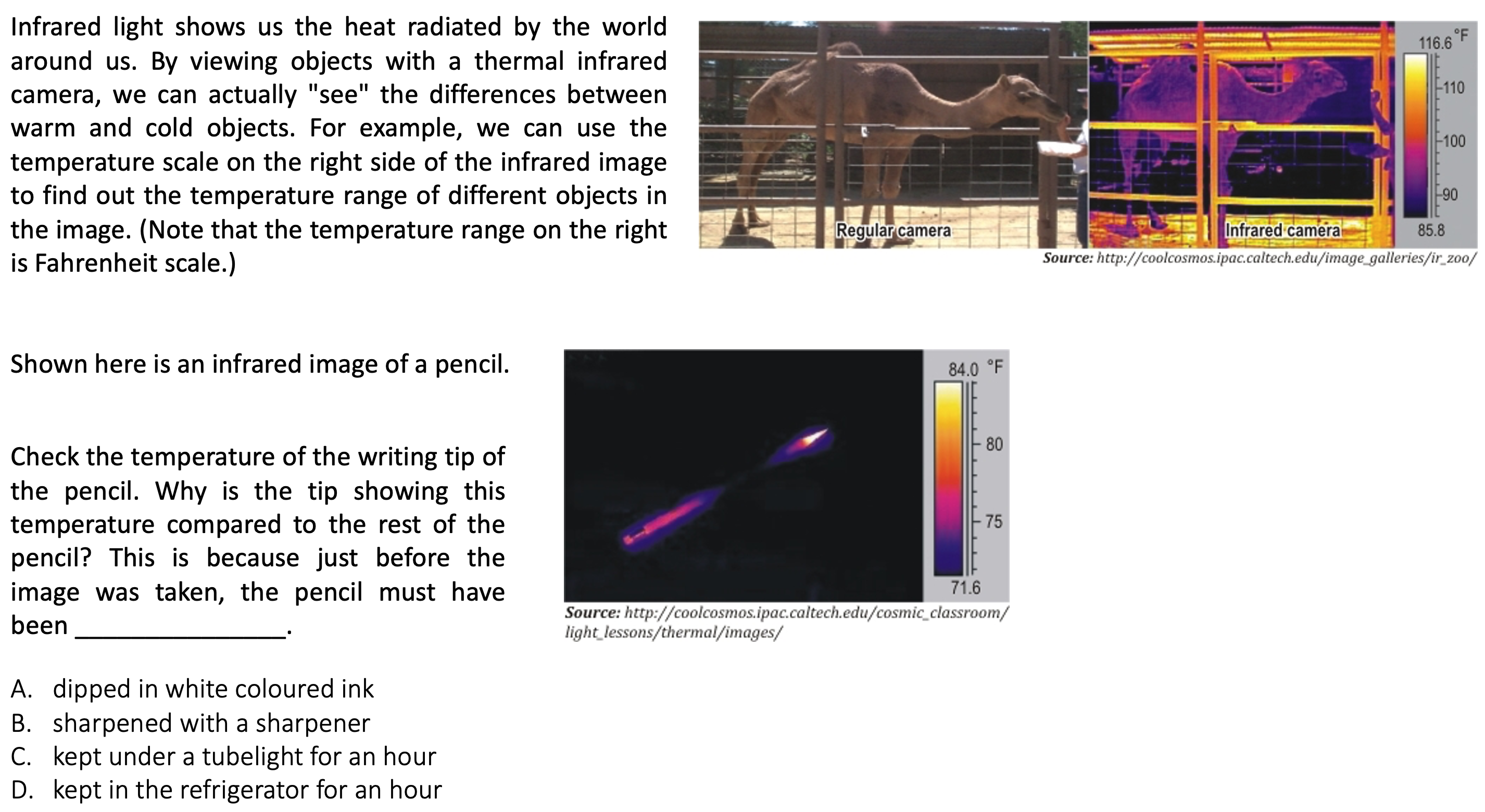

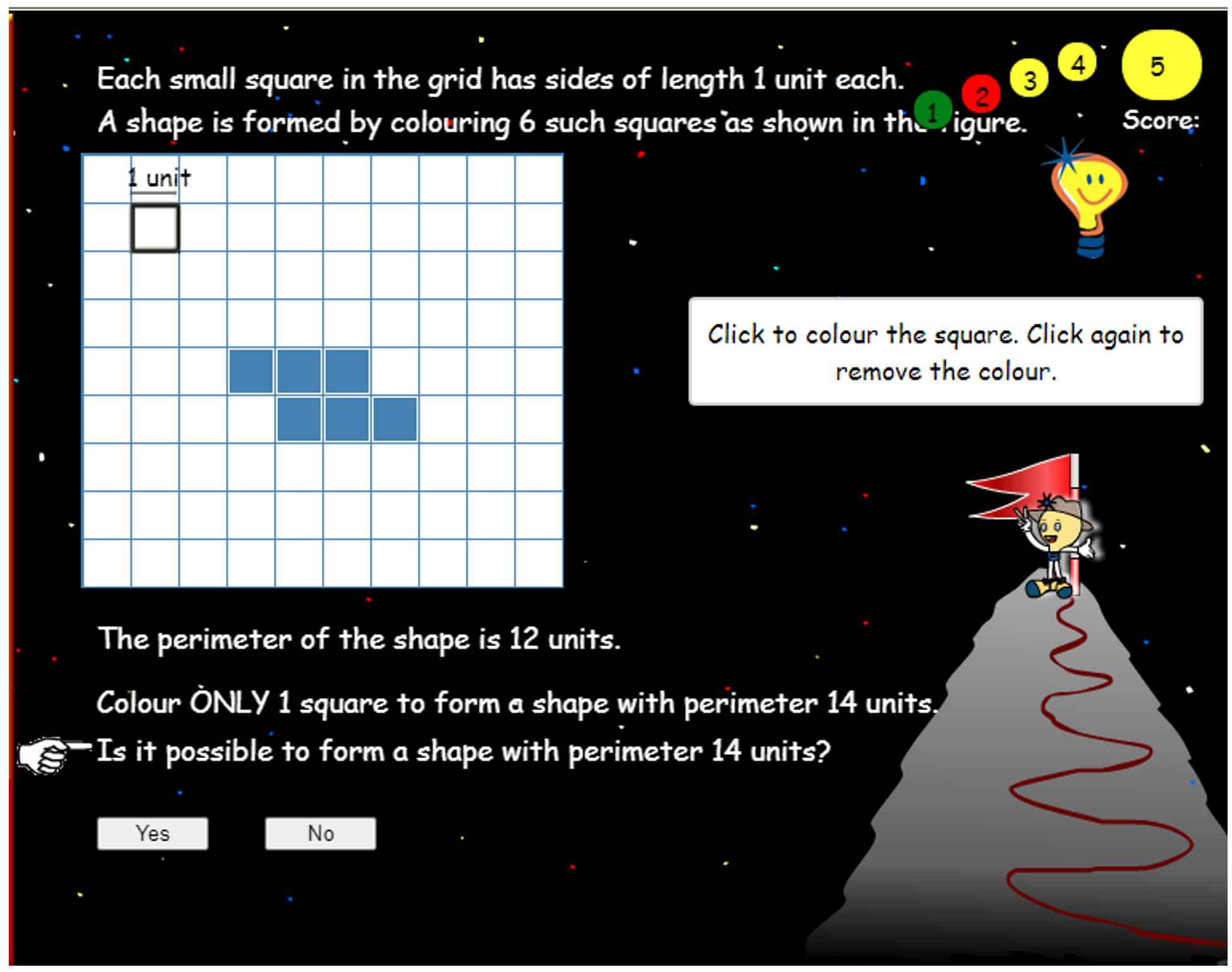

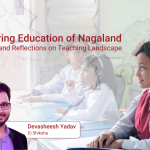

Figure 2: An example of an unfamiliar question that checks for conceptual understanding. The question is different from any typical question asked on a topic like evaporation; in fact, students have to figure out what concept is being tested.

Being able to apply conceptual understanding in unfamiliar contexts is a critical life-skill. Asking such questions in exams would automatically ensure their use in classroom teaching and help develop such skills.

Two points about unfamiliar questions are worth noting. Firstly, they are not necessarily difficult questions. Once students have understood the problem, it may actually be easy to solve. Secondly, all the questions in a test need not be unfamiliar. Up to 30% to 40% of questions can be familiar to students and thus answerable even by weaker students.

Finally, creating such questions may seem challenging, and it does require effort. Some tips are discussed in Box 1.

Box 1: How to create unfamiliar questions based on familiar concepts

1. Any question in an unfamiliar context checks for a concept in a specific context. So you can either start with the concept (e.g. heat, Pythagoras Theorem, food web or climate) or the context (e.g. moon landing, GPS, codes and cryptography or printing a book). But remember that real-life questions often involve multiple concepts and that is good!

2. A good way to develop the skill of creating unfamiliar questions is to study existing questions from tests like ASSET, PISA, TIMSS and others – all of which share examples of such questions. Here is a list of some examples of contexts and concepts that can be tested in those contexts:

Table 1: List of real-life contexts and the concepts that can be tested

3. Keeping the context and content in mind, we now create a question where a real-life example is used from the context while also covering the concept to be tested. It is okay if the example is simplified to be relevant to the appropriate age group, as long as it is based on the same principles actually used. See the example below.

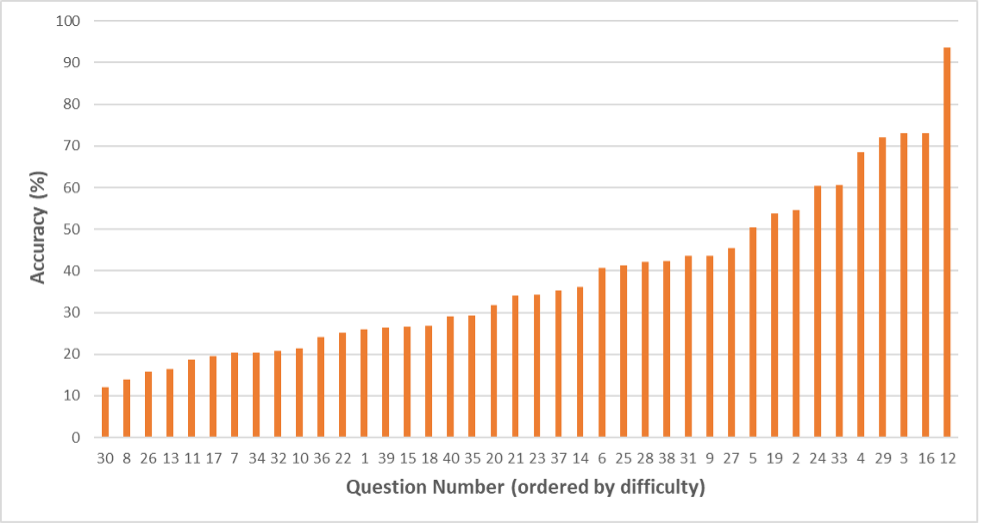

Principle 3. Having questions covering the entire range of difficulty in a paper:

A key purpose of most examinations is to discriminate between students of different levels of ability. To do so, they must contain questions that, taken together, cover the entire range of student ability. Since there will be test-takers with low, medium as well as high ability levels, the examination must be able to properly discriminate between them. To do this, there must be a good mix of easy, medium and difficult questions. Students with poorer knowledge of subject material will be able to solve only the easiest questions, whereas those with a stronger grasp of the subject matter will answer more difficult questions, with only the highest ability students being able to answer the most difficult ones.

How does one know the difficulty level of questions while setting them? This is not easy, and while question makers may estimate the difficulty, these estimates are often not correct. The only way to have this information is to pilot items and record the performance data. (If performance data of past items is available, sometimes the difficulty of similar items can be judged reasonably accurately. Also, if a certain group of experts is proficient in setting questions and then analysing the actual performance, they develop a good sense of student performance on different types of items, though regular pilots are always necessary.)

Currently, it is found that many public examinations tend to have fewer questions at a higher level of difficulty (and in some cases none). For example, the data analysis of one of the past board papers shows that all the multiple-choice questions in the paper have the difficulty parameter in a very narrow range, with most of them discriminating among only students with medium ability levels. The presence of too many easy and too few difficult questions skews the distribution of results and also leads to marks inflation (which pushes up college cut-offs and increases pressure on students as a single mark makes a huge difference).

Figure 4 shows the difficulty distribution of questions in a recent ASSET paper. The difficulty parameter represents the average performance of the item. Thus there are items answered correctly by over 90% of students while others were answered correctly by only 12% of students. Furthermore, there are items at almost every level of intermediate difficulty.
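The difficulty parameter described above, the share of students who answer an item correctly, is straightforward to compute once pilot data is available. The following is a minimal sketch in Python, not Ei's actual analysis: the data layout (one row of 0/1 scores per student), the function names and the band cut-offs of 0.7 and 0.4 are all assumptions chosen for illustration.

```python
from collections import Counter

def item_difficulty(responses):
    """Return, for each item, the proportion of students who answered it correctly.

    `responses` is a list with one row per student; each row holds a 0/1 score
    per item (a hypothetical layout for pilot data).
    """
    n_students = len(responses)
    n_items = len(responses[0])
    return [sum(row[i] for row in responses) / n_students for i in range(n_items)]

def difficulty_bands(p_values, easy_cutoff=0.7, hard_cutoff=0.4):
    """Count how many items fall into each band.

    The cut-offs are illustrative assumptions, not a published standard.
    """
    def band(p):
        if p >= easy_cutoff:
            return "easy"
        if p < hard_cutoff:
            return "difficult"
        return "medium"
    return Counter(band(p) for p in p_values)

# Made-up pilot data: 5 students x 4 items
scores = [
    [1, 1, 1, 0],
    [1, 1, 0, 0],
    [1, 0, 1, 0],
    [1, 1, 0, 1],
    [1, 0, 0, 0],
]
p_values = item_difficulty(scores)   # one proportion-correct value per item
bands = difficulty_bands(p_values)   # how many items are easy / medium / difficult
```

A paper whose items all land in a single band would show the narrow difficulty range described above; a well-spread paper has items in every band.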

If questions at all difficulty levels are properly represented in a paper, the student results will also form a normal curve (which is correct as student abilities form a normal distribution). This is a necessary (though not sufficient) condition for a good assessment.

Principle 4. Ensuring that difficult questions are based on ‘good’ sources of difficulty:

Based on their content, examination questions can be difficult for ‘good’ or ‘bad’ reasons. For example, students may find certain questions difficult because they test multiple skills simultaneously. Such questions encourage students to engage in higher-order thinking and integrate aspects they have learnt. Such questions can be said to be built on good sources of difficulty.

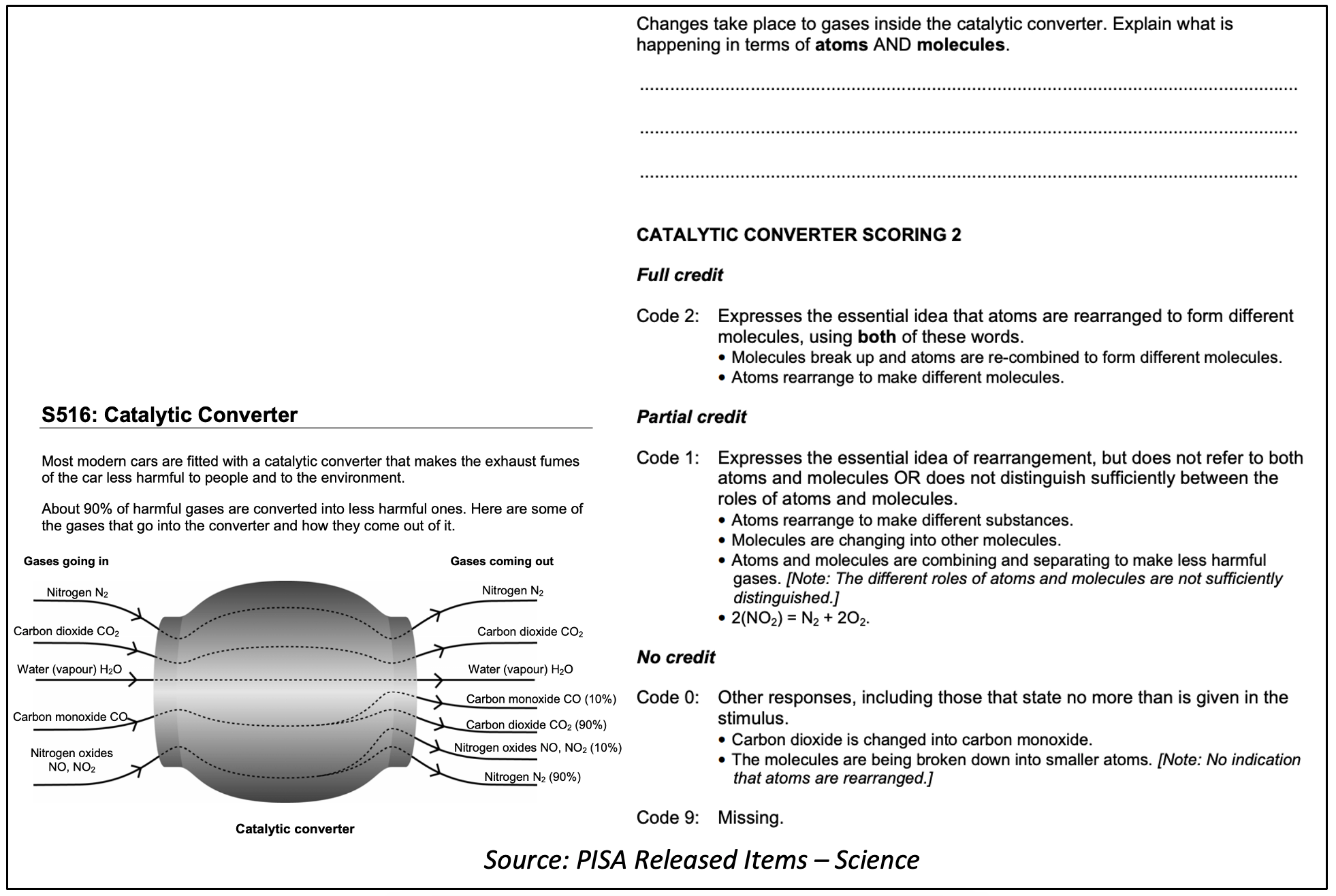

On the other hand, questions that are based on ‘bad’ sources of difficulty may test irrelevant facts or may require students to engage in tedious calculations. While ‘good’ sources of difficulty can encourage meaningful learning, ‘bad’ sources of difficulty may cause students to lose interest in the subject (Box 2 lists some common good and bad sources of difficulty). Further, a good test would represent the different good sources of difficulty well, with none of them over-represented, so that overall the test can discriminate well across students of all ability levels. Principle 3 highlighted the importance of assessments containing questions across a range of difficulties. Even within this range, though, questions should be based only on good sources of difficulty.

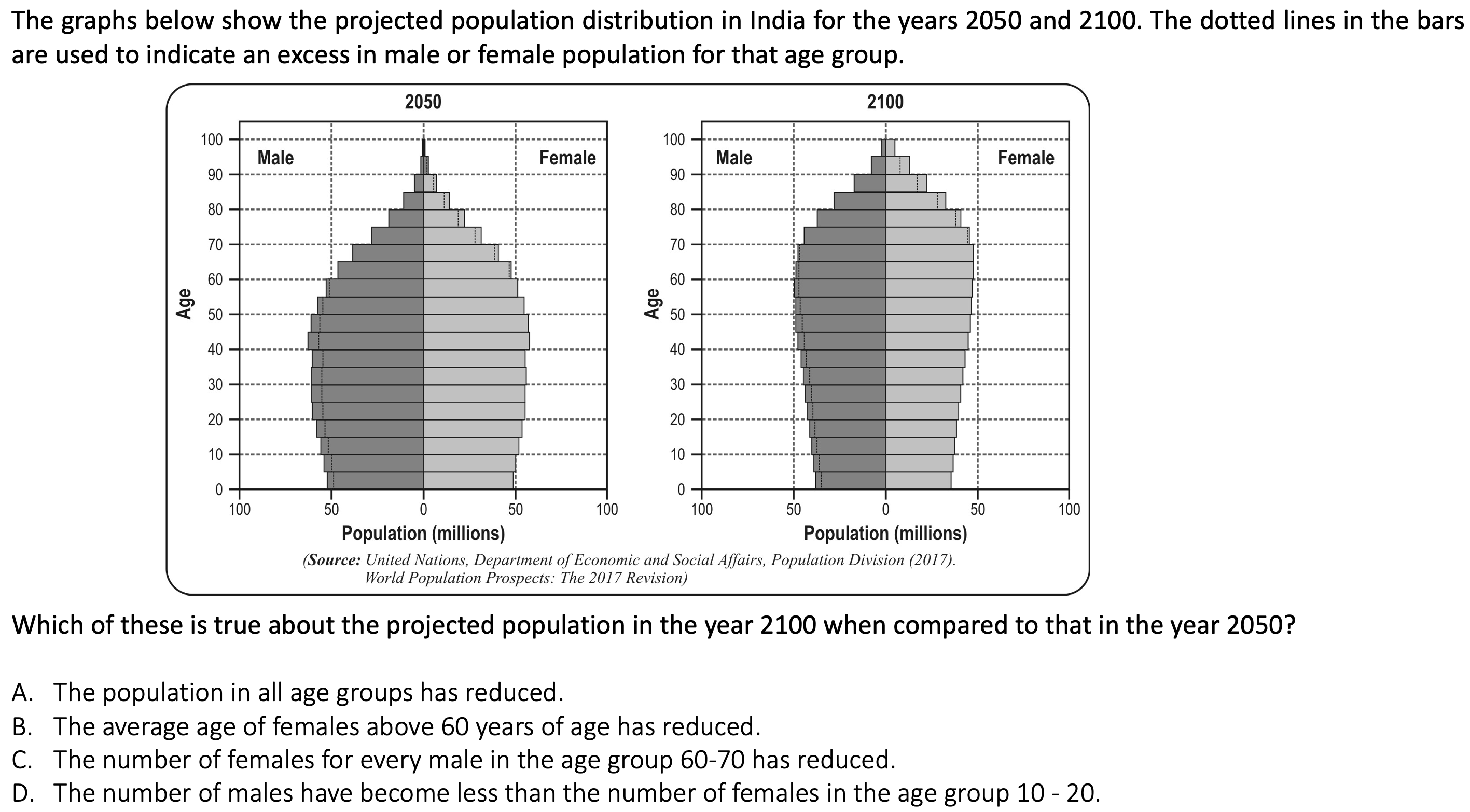

Principle 5. Using authentic data in questions:

Questions in exams should, as far as possible, contain authentic data and examples from the real world, even in situations where the use of fictitious examples or data would otherwise suffice. The use of real-life contexts and data in examinations can make questions more engaging, and help students understand the practical importance of their education. Therefore, in addition to testing concepts, these questions become teaching tools in themselves. Their use in examinations will also encourage teachers to structure classroom instruction accordingly. A sample item using authentic data is shown in Figure 5.

Figure 5: A sample assessment item using authentic data

For example, if scores from sporting competitions are used in a question testing the concept of averages, they should be data from actual sporting events. Similarly, when students studying geography are questioned about plate tectonics, they should be given examples of real tectonic plates and their movements if possible. In language examinations, comprehension passages can be from real texts across domains such as history, science, or economics. Of course, in some cases, the complexity of information may need to be moderated or simplified to be suitable for the targeted class level.

Principle 6. Avoiding narrowly defining a large number of competencies and then mapping questions to individual competencies:

There seems to be a widespread but wrong notion that good education and assessments require a large number of competencies to be listed for each subject, and individual questions in assessments mapped to individual competencies. Further, some seem to believe that merely doing the above will lead to good assessments and, by extension, good education. ‘Competency Based Education’, a laudable goal, is sometimes understood in this narrow sense. In our experience, listing competencies and then mapping questions to competencies are both largely mechanical steps, and may actually increase and not reduce the rote component of an assessment.

The belief that students need to acquire key competencies is valid. However, the idea that this can be achieved in a mechanistic manner, by first listing competencies and then creating questions or content that maps to those competencies, is flawed. Only the quality of content and assessments can lead to good teaching or learning, not merely a mapping. Particularly in examinations, overly specific mapping leads to the use of narrowly structured examination questions that test only particular competencies, and that too in isolation. In fact, good questions that test multiple competencies are usually excluded in such a process because they breach artificially defined boundaries for competencies, making the paper more mechanical.

This problem is present even in ‘advanced’ education systems. In the USA, for example, the Common Core was introduced to establish set standards and competencies for student education, to improve learning outcomes. Though there was a lot more to the Common Core, in many cases, it was treated by teachers merely as a list of standards to be rigidly focussed on through lessons or questions.[1] While examination boards must establish a set of necessary skills, concepts, and learning outcomes to guide the education system, they should not be overly prescriptive in how questions test them or aim to break them into very fine sub-categories.

Principle 7. Using different question types to test different aspects of student competencies:

We often hear debates and arguments about how certain types of questions (say objective or subjective, or multiple-choice questions) are inferior or superior to other types of questions. However, the reality is that each question type has its strengths, weaknesses, and suitability based on the subject and the goal of the assessment. A comprehensive assessment will have a mix of various types of questions, each used for its own strengths, as described in Box 3.

[1] Loveless, T. (2021, March 18). Why Common Core failed. Brookings. Retrieved January 24, 2022, from https://www.brookings.edu/blog/brown-center-chalkboard/2021/03/18/why-common-core-failed/

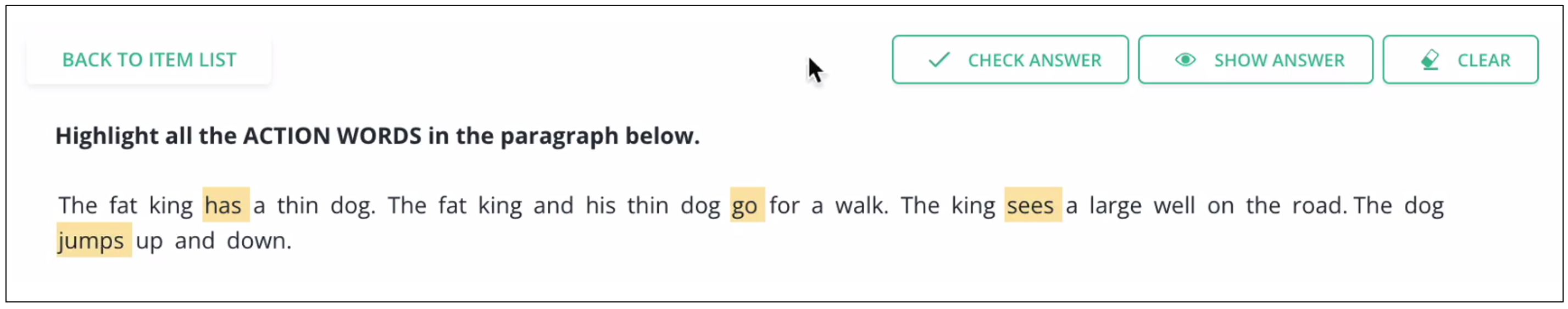

Box 3: Different strengths and uses of different question formats

Different question formats have their own strengths and weaknesses and may be suitable in different situations:

- Multiple-choice questions (MCQs) capture students’ misconceptions or common errors if framed well. They can be created very scientifically and scored quickly, but good MCQs require effort and experience to create. They can test higher-order thinking skills too, though badly designed MCQs often just test facts and may serve little purpose.

- True/False questions are easy to frame and score but encourage guessing, given the 50% chance of getting them correct. Asking for an accompanying justification can help gauge student thought processes.

- Blank or short answer questions are easy to frame and can be used to test key facts, terms or principles. They can be an effective method to test if students know and can express an answer concisely.

- Long answer or essay-type questions can test a student’s ability to analyse information, synthesise different facts and ideas, etc. They are often the best question type for this objective. They also provide nuanced information on misconceptions and are easy to make. However, they take greater effort than other question types to evaluate and require a detailed rubric for proper marking. It is almost impossible to eliminate an element of subjectivity in their correction, though.

- Technology Enhanced Items (TEI) can improve test-takers’ engagement through the use of visual or auditory aids, for example, and provide a detailed diagnosis with standard correction. But they require time and skills to create and digital infrastructure to administer to students. Some examples of TEIs are shown in Figure 6.

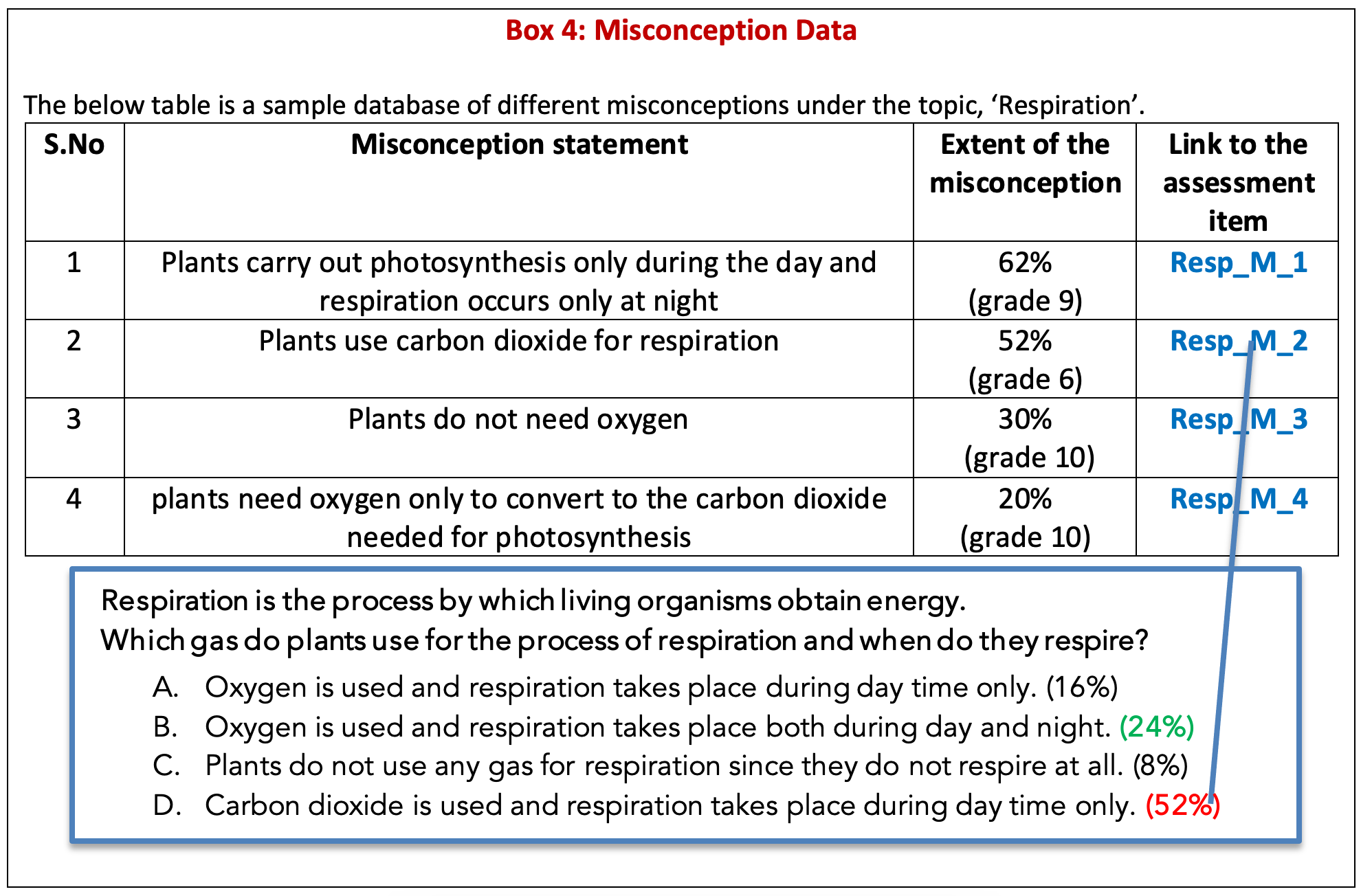

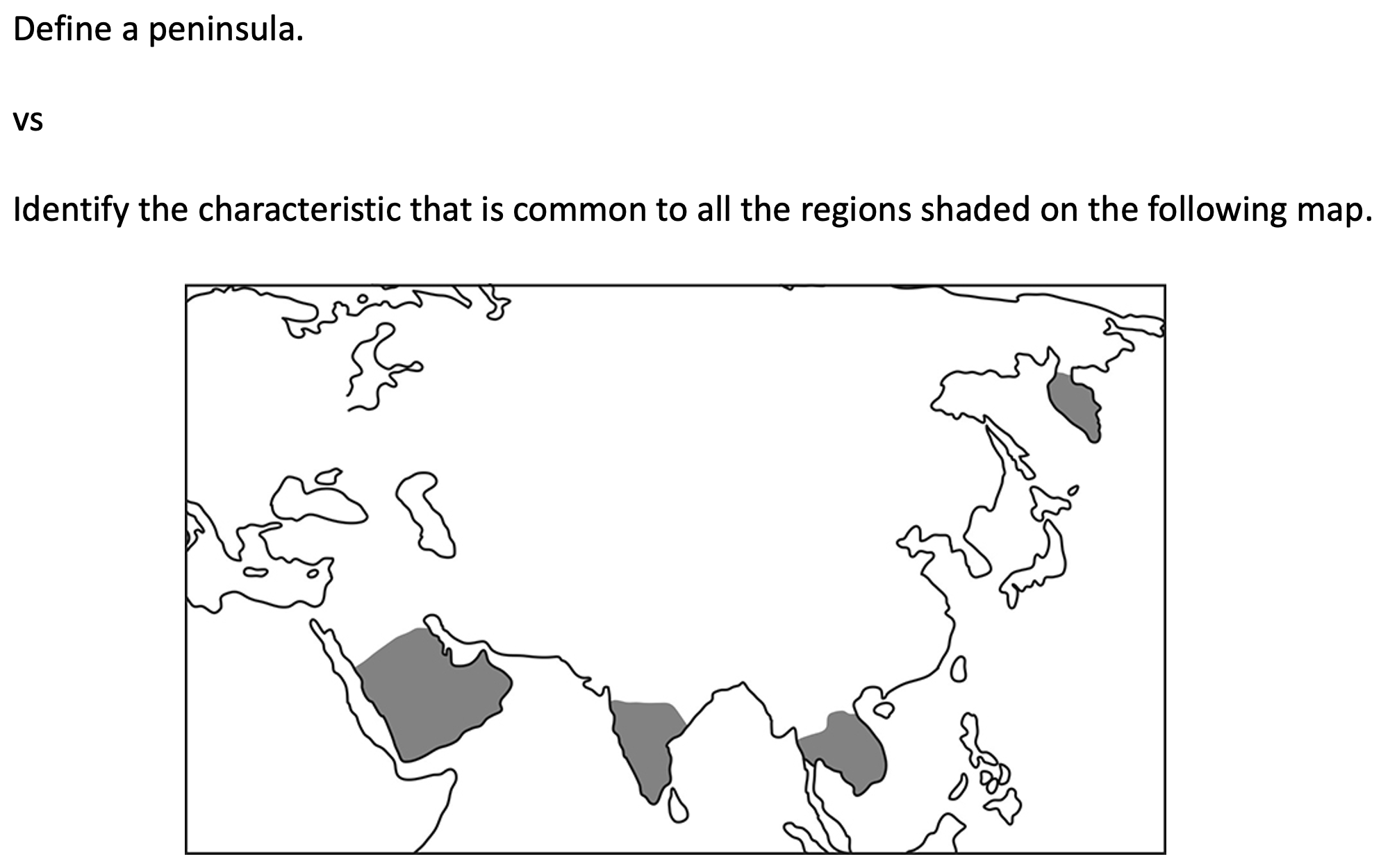

Figure 6: Technology-enhanced items from mathematics and language. Students interact with such questions, which not only record details of these interactions but may also adapt based on student responses.

Principle 8. Providing test creators access to past student performance data, to use while designing questions:

Especially for large-scale or summative examinations, test creators should be given access to data on past assessments. This will provide them with two types of insight: first, which items worked and what issues, if any, arose with items; and second, the kinds of responses and errors students made.

Knowing which items functioned well and which did not helps create better items for future assessments. Item data may indicate difficulty, discrimination, the extent of guessing, the ability levels of the students who answered the item correctly and the wrong responses students gave. Though all of this data may not be available for every question, each piece of information provides valuable insights. As mentioned earlier, past item data also helps question makers estimate the difficulty of similar new items and thus create questions of varying difficulty in the paper.

(Knowing areas of student error is useful not just for assessment creators but for teachers as well. Principle 10 below talks about the benefits to teachers and future students when this data is shared with them in the form of post-examination assessment booklets.) Having misconception data in the format shown in Box 4 helps in developing good assessment items testing misconceptions and in creating plausible distractors.
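To make the kinds of item data mentioned above concrete, here is a minimal sketch in Python of a per-item report for multiple-choice questions. It is an illustration under stated assumptions, not the analysis Ei or any examination board actually runs: the function name `item_report`, the data layout and the sample answers are hypothetical, and discrimination is approximated with a simple upper-lower index (share correct among the top-scoring half minus share correct among the bottom-scoring half), which is only one of several measures in use.

```python
from collections import Counter

def item_report(answers, key):
    """Summarise past performance for each multiple-choice item.

    answers: one list of chosen options per student (hypothetical layout).
    key:     the correct option for each item.
    Assumes at least two students so that both halves are non-empty.
    """
    # Total score per student, used to rank students by overall performance
    totals = [sum(a == k for a, k in zip(student, key)) for student in answers]
    order = sorted(range(len(answers)), key=lambda s: totals[s])
    half = len(order) // 2
    low, high = order[:half], order[-half:]

    report = []
    for i, correct_option in enumerate(key):
        correct = [student[i] == correct_option for student in answers]
        # Difficulty: share of all students answering correctly
        difficulty = sum(correct) / len(answers)
        # Upper-lower discrimination index (a simple assumed measure)
        discrimination = (sum(correct[s] for s in high)
                          - sum(correct[s] for s in low)) / half
        # Which wrong options students picked, and how often
        wrong = Counter(student[i] for student in answers
                        if student[i] != correct_option)
        report.append({
            "difficulty": difficulty,
            "discrimination": discrimination,
            "wrong_choices": dict(wrong),
        })
    return report

# Made-up data: 6 students x 3 items
key = ["B", "A", "D"]
answers = [
    ["B", "A", "D"],
    ["B", "A", "C"],
    ["B", "A", "C"],
    ["B", "C", "C"],
    ["A", "C", "C"],
    ["B", "C", "A"],
]
report = item_report(answers, key)
```

In this made-up data, most students who miss the third item choose option C, which is the kind of pattern that points to a shared misconception and suggests a plausible distractor for future items.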


- About the Author: Sridhar Rajagopalan is Co-founder & Chief Learning Officer of Ei