- December 4, 2023

- by Educational Initiatives


This article was originally published in Hindustan Times on 3rd October.

The idea of a Common University Entrance Test (CUET) is inherently a good one. Since admissions to colleges test for similar subject proficiency, there is no need for multiple tests when one good test — conducted well — can bring about standardisation and fairness. However, the key phrases here are “good test” and “conducted well”. Much has been discussed about the issues in conducting the test, including technical snags, last-minute changes and delays, but these are teething issues that will likely be addressed in the next round. Instead, we will focus on what constitutes a good test.

The National Education Policy 2020 (NEP) seems to have envisioned CUET as a good test. It said that these exams should test “conceptual understanding and the ability to apply knowledge” and that colleges should value the common test due to its “high quality, range, and flexibility”.

When CUET was announced, it was said that the test would be a simple one, based strictly on the National Council of Educational Research and Training (NCERT) Class 12 syllabus. The papers have followed that approach. The intention was to eliminate or significantly reduce coaching, but this has not worked. Coaching for CUET surged within just a few months. Coaching platforms, such as Adda 247, said that they saw “100% growth for our K-12 [Kindergarten-to-Class 12] segment business since CUET introduction.”

A test based only on the Class 12 NCERT syllabus is the opposite of what NEP recommended, and it rests on the mistaken belief that a simpler test will reduce coaching. Coaching is a social phenomenon and will occur whether a test is simple or difficult. An easier test, if anything, will invite more coaching, because only higher-quality tutors and institutes are able to offer coaching for a difficult test. Instead of trying to eliminate coaching, CUET paper-setters should focus on developing a good test that assesses higher-order skills and is challenging enough for students seeking admission to top colleges.

Incidentally, our board exams have seen a similar dilution in quality for years. This is reflected in the number of students scoring very high marks and in college cut-offs that are often above 99%. We recently analysed questions in board exams and found most to be mechanical, textbook-based, and straightforward. Questions that are challenging and require a deeper understanding of the subject result in lower scores overall, but importantly, they differentiate between students in a way that is useful for purposes such as admission to courses or selection for jobs.

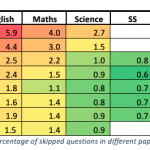

Data from CUET also shows that it suffered from this problem. It was reported, for instance, that about 19,800 students scored 100 percentile. Since about 1.5 million students took the exams, this means about 1.3% scored 100 percentile. In contrast, an average of fewer than 30 students out of about 200,000 who wrote JEE Advanced scored 100 percentile (under 0.015%). When a paper is set strictly based on NCERT Class 12 content, it is not just possible but expected that thousands of students will score full marks. This makes CUET ineffective, as its primary function is to help colleges sift through candidates and pick the higher-performing students.
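For readers who want to check the arithmetic behind this comparison, here is a minimal sketch in Python. It uses only the approximate figures quoted above; the variable and function names are our own, and the figure of 30 is treated as an upper bound because the reported number is "fewer than 30".

```python
# Approximate figures as quoted in the article; names are illustrative.
cuet_top_scorers = 19_800
cuet_candidates = 1_500_000
jee_top_scorers = 30          # upper bound: the article says "fewer than 30"
jee_candidates = 200_000


def share_percent(top: int, total: int) -> float:
    """Return the top scorers as a percentage of all candidates."""
    return 100 * top / total


cuet_share = share_percent(cuet_top_scorers, cuet_candidates)
jee_share = share_percent(jee_top_scorers, jee_candidates)

print(f"CUET: {cuet_share:.2f}% of candidates at 100 percentile")
print(f"JEE Advanced: at most {jee_share:.3f}% of candidates")
print(f"CUET share is at least {cuet_share / jee_share:.0f} times larger")
```

On these figures, the share of top scorers in CUET is about 88 times that of JEE Advanced, which is the gap the argument rests on.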

Of course, we must not forget that difficult exams often see public protests, while easy exams don’t. So, exam-setting bodies such as the National Testing Agency (NTA) and the central and state boards find it easier to set easy tests. Though ineffective and rife with issues of fairness and longer-term problems, these tests don’t face a backlash. The chairperson of a state board recently told me that the board is often questioned if student performance is lower than in previous years.

The second issue is whether multiple choice questions (MCQs) are appropriate for CUET. Ideally, exams should have a mix of questions, including long answer-type questions where students demonstrate their ability to compose arguments or solve a mathematical problem step by step. However, correcting such papers fairly and quickly may not be feasible when the number of candidates is in the hundreds of thousands. Hence, we may need to stick with the MCQ format for some CUET papers. This does not mean that the questions must be recall-based, rote-based, or even straight from the textbook. It is possible to set high-quality MCQs that test higher-order and critical thinking skills. MCQs can be easily and rigorously analysed, and an analysis of good papers shows that they contain questions at almost all difficulty levels. Further, the most difficult of these questions are difficult not because they are tedious or lengthy but because they require critical thinking, and they are challenging for all, including the very best (say, top 0.5%) of candidates. Exceptionally few students, often fewer than 0.1%, should be able to score full marks in a selection test such as CUET.
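To illustrate what such an analysis can look like, here is a minimal sketch in Python. It computes one common measure of question difficulty, the fraction of candidates who answer each question correctly, along with the share of candidates scoring full marks. The response data and function names are made up for illustration and do not come from any actual CUET paper.

```python
# Hypothetical responses: each row is a candidate, each column a question.
# 1 = correct, 0 = incorrect. These values are invented for illustration.
responses = [
    [1, 1, 1, 0],
    [1, 1, 0, 0],
    [1, 0, 1, 0],
    [1, 1, 0, 1],
    [0, 1, 0, 0],
]


def item_difficulty(rows):
    """Fraction of candidates answering each question correctly.

    A value near 1 indicates an easy question; near 0, a hard one.
    """
    n = len(rows)
    return [sum(column) / n for column in zip(*rows)]


def full_marks_share(rows):
    """Fraction of candidates who answered every question correctly."""
    return sum(all(row) for row in rows) / len(rows)


difficulty = item_difficulty(responses)
top_share = full_marks_share(responses)

for number, value in enumerate(difficulty, start=1):
    print(f"Question {number}: {value:.0%} answered correctly")
print(f"Candidates with full marks: {top_share:.0%}")
```

In this toy example the questions span a range from easy to hard and no candidate scores full marks, which is the pattern one would hope to see in a paper meant to separate the strongest candidates.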

Overall, many of the parameters of the current CUET are working. However, we must ensure that we create a high-quality test that helps identify students with stronger critical and higher-order thinking skills so that they have access to the top colleges in the country. Such a test would be challenging but fair. It would not be based only on the Class 12 NCERT textbook but on applications of the content of Classes 11 and 12. A good and fair selection of candidates is the first step toward creating a high-quality higher education system. This, in turn, is the first step to creating a research-and-innovation-based society. CUET has that opportunity, and it is not a very difficult one to realise.